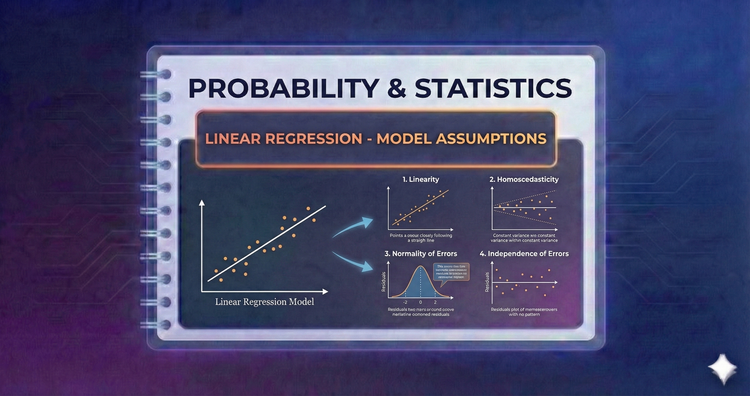

Probability & Statistics - Linear Regression - Model Assumptions

Regression relies on strict rules: Linearity, Independence, Homoscedasticity (constant variance), and Normality of errors. If these assumptions are violated, the model's predictions and p-values become unreliable or misleading.

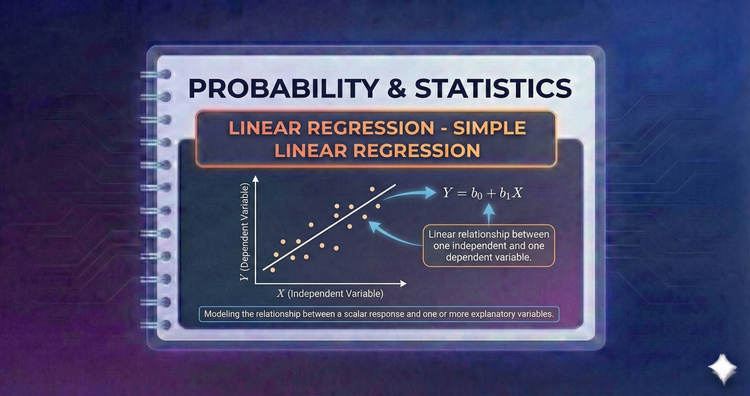

Probability & Statistics - Linear Regression - Simple Linear Regression

The "line of best fit." We use the Least Squares method to draw a straight line through data points, minimizing the error. It models the direct relationship between an input and an output, allowing us to predict future values.

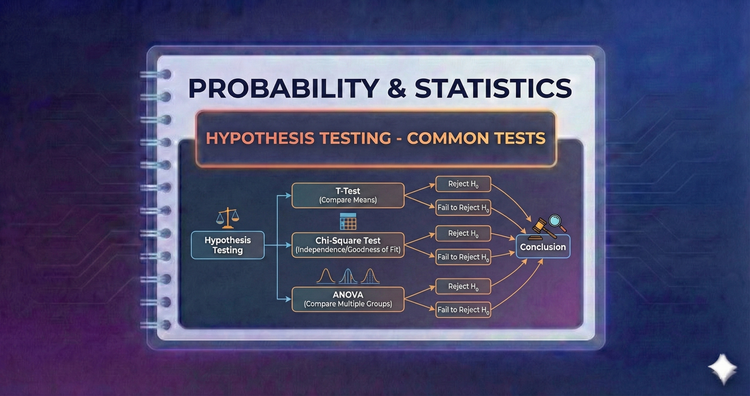

Probability & Statistics - Hypothesis Testing - Common Tests

We survey the toolkit: One-sample t-tests for benchmarks, Two-sample

t-tests for comparing groups (A/B testing), and Paired tests for before-after data. Selecting the correct test is crucial for valid conclusions.

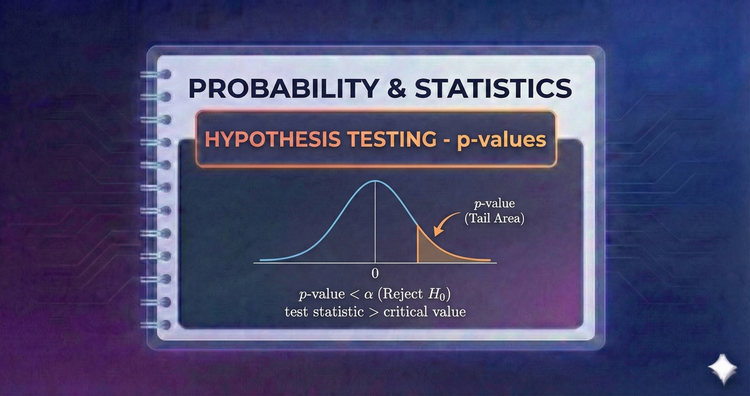

Probability & Statistics - Hypothesis Testing - p-values

The p-value measures evidence against H0. It is the probability of observing data this extreme assuming H0 is true. A low p-value (e.g., < 0.05) signals that the observed result is unlikely to be a fluke, suggesting H0 is false.

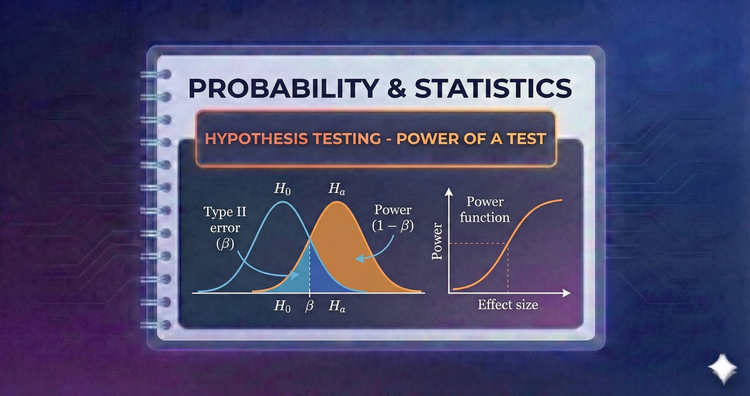

Probability & Statistics - Hypothesis Testing - Power of a Test

Power is the ability of a test to detect a real effect. A powerful test rarely misses a discovery. We boost power by increasing sample size or reducing noise, ensuring our study is sensitive enough to find what we are looking for.

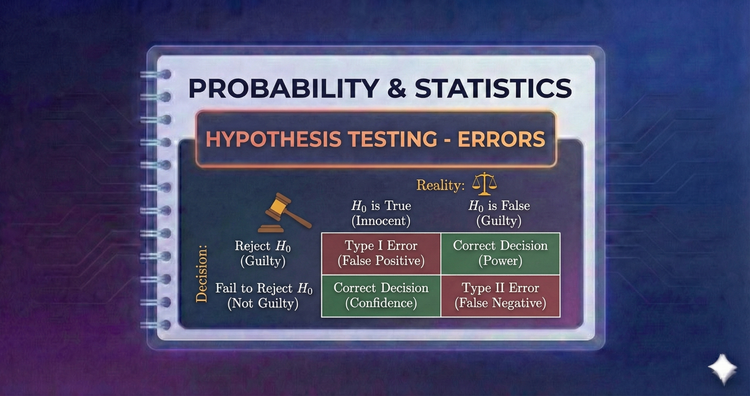

Probability & Statistics - Hypothesis Testing - Errors

Decisions carry risk. A Type I Error is a False Positive (rejecting a true H0). A Type II Error is a False Negative (missing a real effect). We design tests to minimize these risks, balancing the cost of a false alarm against a missed discovery.

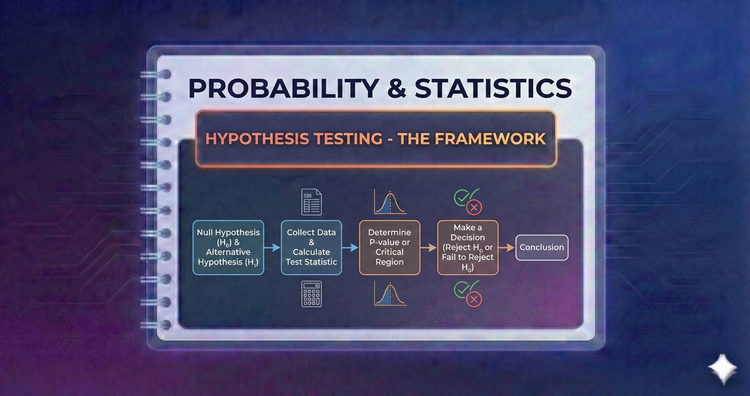

Probability & Statistics - Hypothesis Testing - The Framework

The scientific method in math form. We pit a Null Hypothesis (H0, status quo) against an Alternative (H1, the discovery). We assume H0 is true and only reject it if the data provides overwhelming evidence to the contrary.

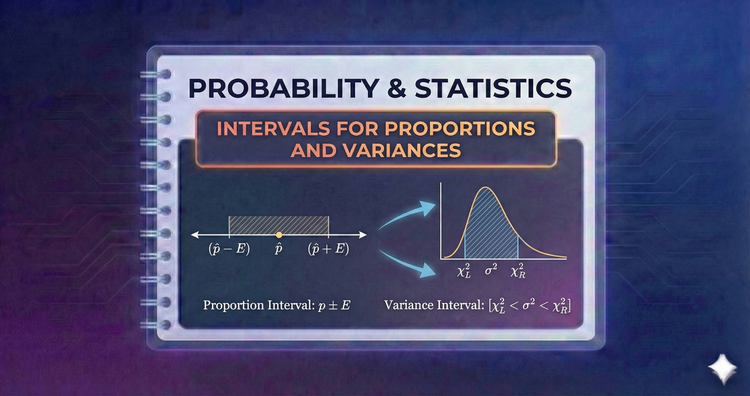

Probability & Statistics - Intervals for Proportions and Variances

We extend intervals to proportions (for polling data) and variances (for quality control). Using Normal approximations for proportions and Chi-Square for variances, we can bound the likely percentage of voters or the consistency of a machine.

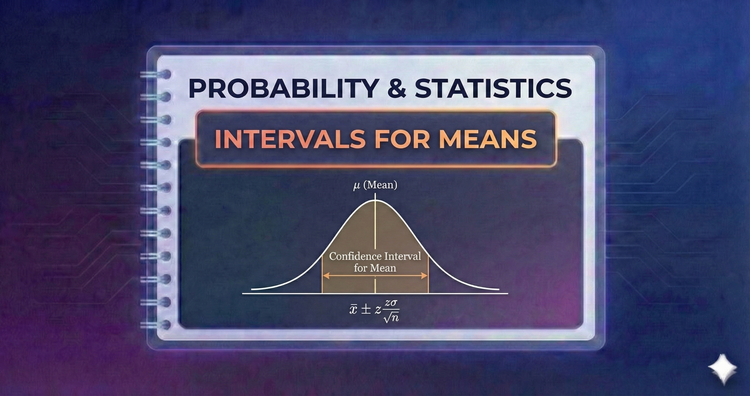

Probability & Statistics - Intervals for Means

We build intervals for the mean using Z-scores or t-scores. This distinction is vital: using the t-distribution accounts for the extra uncertainty in small samples, ensuring our bounds remain accurate.

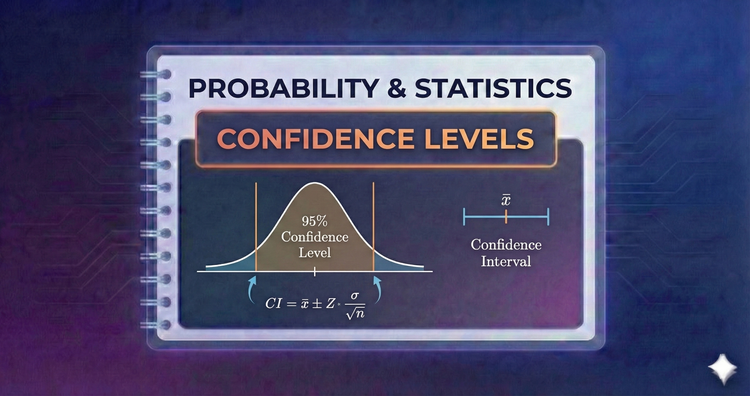

Probability & Statistics - Confidence Levels

What does "95% confident" mean? It refers to the method, not the specific interval. It implies that if we repeated the sampling 100 times, 95 of the resulting intervals would capture the true population parameter. It measures reliability.