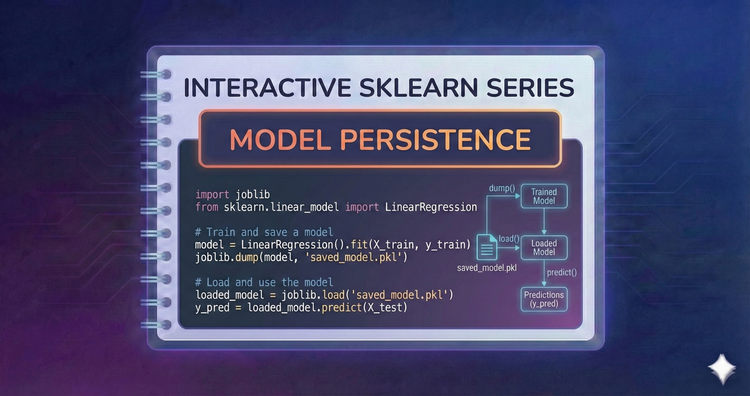

Interactive SkLearn Series - Model Persistence

Save your work. We’ll use joblib to serialize trained models to disk, allowing you to reload them later for inference without needing to retrain from scratch.
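As a taste of what the lesson covers, here is a minimal sketch of a save-and-reload round trip. The dataset, the estimator, and the file name `model.joblib` are assumptions chosen for illustration, not necessarily what the lesson itself uses.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Train a small model (illustrative choice of data and estimator).
X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Serialize to disk, then load it back without retraining.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.joblib")  # hypothetical file name
    joblib.dump(model, path)
    restored = joblib.load(path)

# The restored model should reproduce the original predictions.
same_predictions = np.array_equal(model.predict(X), restored.predict(X))
print("Predictions match after reload:", same_predictions)
```

Because joblib files are pickle-based, only load files from sources you trust, and reload them with the same scikit-learn version used for saving where possible.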

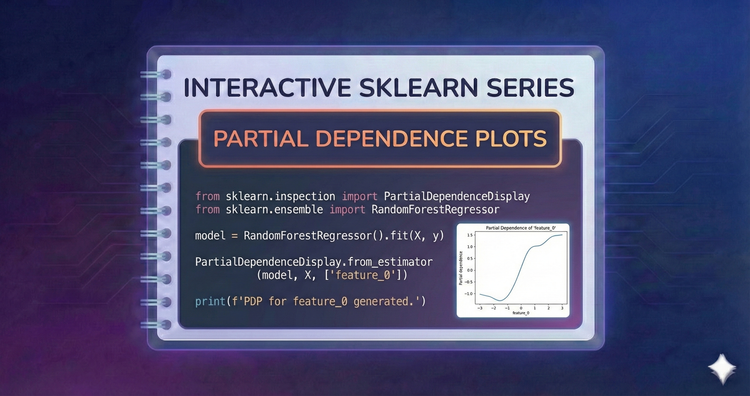

Interactive SkLearn Series - Partial Dependence Plots

Visualize feature effects. We’ll generate Partial Dependence Plots (PDPs) to see how the model’s average prediction changes as one feature varies, averaging out the effect of the other features.
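The numbers behind such a plot can be computed without any plotting library. The sketch below assumes a synthetic regression dataset and a gradient-boosted model purely for illustration; it uses `sklearn.inspection.partial_dependence` to get the averaged prediction over a grid of values for feature 0.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.inspection import partial_dependence

# Synthetic data and model, chosen only for illustration.
X, y = make_regression(n_samples=200, n_features=4, random_state=0)
model = GradientBoostingRegressor(n_estimators=50, random_state=0).fit(X, y)

# Average prediction as feature 0 sweeps over a 20-point grid,
# marginalizing over the observed values of the other features.
pd_result = partial_dependence(
    model, X, features=[0], grid_resolution=20, kind="average"
)
avg = pd_result["average"]  # shape: (n_outputs, n_grid_points)
print(avg.shape)
```

For the plot itself, `PartialDependenceDisplay.from_estimator` accepts the same estimator, data, and feature list, and requires matplotlib.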

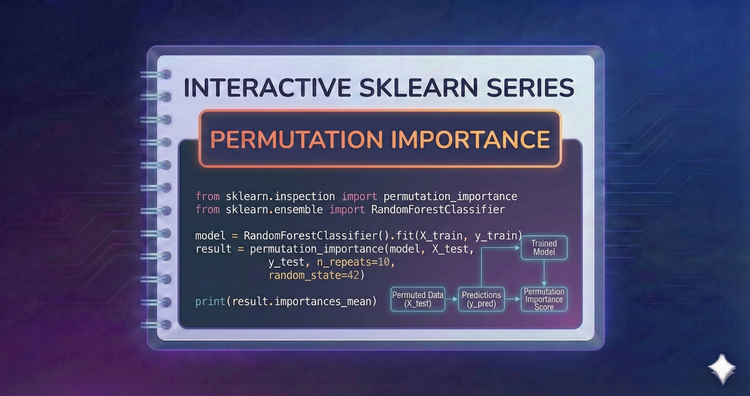

Interactive SkLearn Series - Permutation Importance

Meaningful interpretation. We’ll shuffle each feature’s values and measure the resulting drop in performance, showing which features the model actually relies on for its predictions.
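The shuffle-and-score idea can be sketched with `sklearn.inspection.permutation_importance`. The synthetic dataset, the random forest, and the choice of 10 repeats are assumptions made for this example.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Synthetic data with 2 informative features out of 5 (illustrative).
X, y = make_classification(
    n_samples=300, n_features=5, n_informative=2, n_redundant=0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_train, y_train)

# Shuffle each feature 10 times on held-out data and record the score drop.
result = permutation_importance(
    model, X_test, y_test, n_repeats=10, random_state=0
)

# Report features from most to least important.
for i in result.importances_mean.argsort()[::-1]:
    print(f"feature {i}: {result.importances_mean[i]:.3f} "
          f"+/- {result.importances_std[i]:.3f}")
```

Scoring on held-out data rather than the training set helps keep the importances from reflecting what the model merely memorized.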

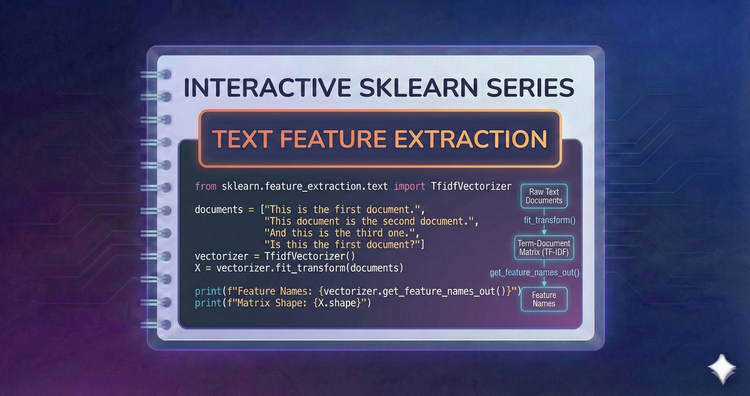

Interactive SkLearn Series - Text Feature Extraction

Turn text into math. We’ll use CountVectorizer for bag-of-words and TfidfVectorizer to weigh word importance, preparing raw text documents for machine learning algorithms.
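A minimal sketch of both vectorizers on a three-document toy corpus follows. The corpus is invented for illustration, and both vectorizers are left at their default settings.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Toy corpus, invented for this example.
docs = ["the cat sat", "the dog sat", "the cat chased the dog"]

# Bag-of-words: one column per vocabulary term, values are raw counts.
count_vec = CountVectorizer()
counts = count_vec.fit_transform(docs)
vocab = sorted(count_vec.vocabulary_)  # column order is alphabetical
print(vocab)
print(counts.toarray())

# TF-IDF: terms that appear in every document (here "the") are down-weighted.
tfidf_vec = TfidfVectorizer()
tfidf = tfidf_vec.fit_transform(docs).toarray()
the_col = tfidf_vec.vocabulary_["the"]
cat_col = tfidf_vec.vocabulary_["cat"]
print(f"doc 0: the={tfidf[0, the_col]:.3f}, cat={tfidf[0, cat_col]:.3f}")
```

In the first document every word occurs once, yet "the" gets a lower TF-IDF weight than "cat" because it appears in all three documents.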

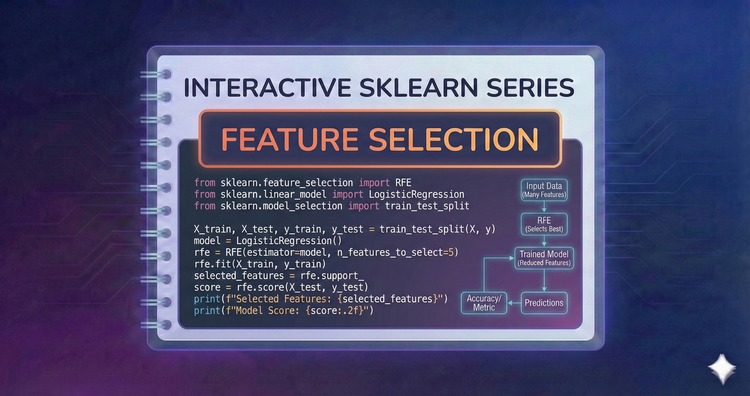

Interactive SkLearn Series - Feature Selection

Less is often more. We’ll use Recursive Feature Elimination (RFE) and SelectFromModel to automatically identify and keep only the most predictive features, improving model speed.
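Both selectors wrap an estimator that exposes coefficients or feature importances. The sketch below assumes a synthetic dataset with 3 informative features out of 10, and the estimators and the `"median"` threshold are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel
from sklearn.linear_model import LogisticRegression

# Synthetic data: 10 features, only 3 of them informative (illustrative).
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0, random_state=0
)

# RFE: repeatedly fit, drop the weakest feature, and refit until 3 remain.
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=3).fit(X, y)
X_rfe = rfe.transform(X)
print("RFE kept columns:", rfe.support_.nonzero()[0])

# SelectFromModel: fit once, keep features at or above the median importance.
sfm = SelectFromModel(
    RandomForestClassifier(n_estimators=50, random_state=0), threshold="median"
).fit(X, y)
X_sfm = sfm.transform(X)
print("SelectFromModel kept columns:", sfm.get_support().nonzero()[0])
```

RFE refits the estimator many times, so it costs more than the single fit used by SelectFromModel, but it re-evaluates feature rankings after each removal.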

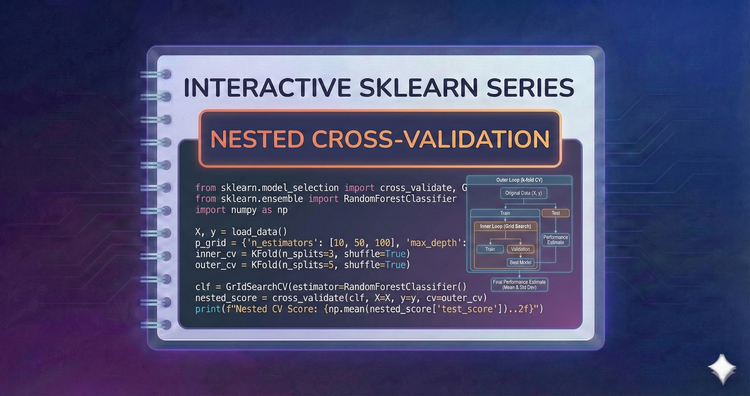

Interactive SkLearn Series - Nested Cross-Validation

The gold standard for evaluation. We’ll implement Nested CV to separate hyperparameter tuning from model evaluation, giving an estimate of generalization error that is not optimistically biased by the tuning itself.
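One common way to express nesting in scikit-learn is to pass a search object to `cross_val_score`: the inner loop tunes, the outer loop evaluates. The dataset, the SVC estimator, the grid, and the fold counts below are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Inner loop: picks hyperparameters using only the outer training fold.
inner_cv = KFold(n_splits=3, shuffle=True, random_state=0)
search = GridSearchCV(SVC(), {"C": [0.1, 1, 10]}, cv=inner_cv)

# Outer loop: scores the whole tuning procedure on data it never saw.
outer_cv = KFold(n_splits=5, shuffle=True, random_state=1)
scores = cross_val_score(search, X, y, cv=outer_cv)

print(f"Nested CV accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Each outer fold may select different hyperparameters, so the result estimates how well the tuning procedure generalizes rather than one fixed configuration.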

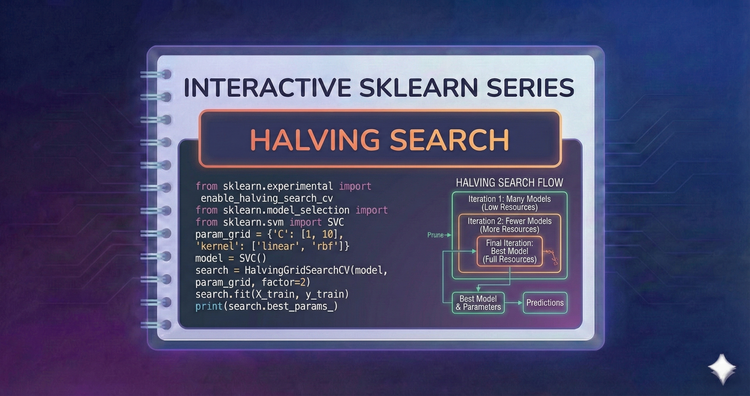

Interactive SkLearn Series - Halving Search

Speed up tuning with "successive halving." We’ll use HalvingGridSearchCV to quickly discard poor parameter combinations on small data subsets, focusing resources on promising candidates.
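A minimal sketch of a halving search is shown below. In the scikit-learn releases I am aware of, `HalvingGridSearchCV` is still experimental and must be enabled with an explicit import. The dataset, estimator, grid, and `factor=3` are illustrative assumptions.

```python
# The experimental flag must be imported before HalvingGridSearchCV.
from sklearn.experimental import enable_halving_search_cv  # noqa: F401
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import HalvingGridSearchCV

X, y = make_classification(n_samples=500, random_state=0)

# 3 x 3 = 9 candidate configurations (illustrative grid).
param_grid = {"max_depth": [2, 4, None], "min_samples_split": [2, 5, 10]}

# Each round keeps roughly the best 1/factor of candidates
# and gives the survivors factor times more samples.
search = HalvingGridSearchCV(
    RandomForestClassifier(n_estimators=20, random_state=0),
    param_grid,
    factor=3,
    random_state=0,
).fit(X, y)

print("Candidates per round:", search.n_candidates_)
print("Samples per round:   ", search.n_resources_)
print("Best parameters:     ", search.best_params_)
```

The first round scores all nine candidates on a small subset, so early rankings are noisy; a larger `factor` discards candidates more aggressively.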

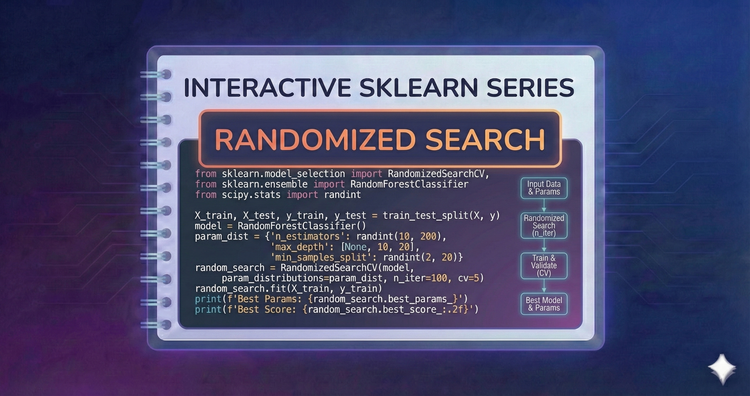

Interactive SkLearn Series - Randomized Search

Tune efficiently. When the search space is huge, RandomizedSearchCV samples a fixed number of configurations, often finding equal or better models than grid search in a fraction of the time.
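The sketch below samples 10 configurations from continuous log-uniform ranges instead of enumerating a grid. The SVC estimator, the ranges, and `n_iter=10` are assumptions made for illustration; `loguniform` comes from SciPy.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_iris
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Distributions rather than lists: each trial draws a fresh value.
param_distributions = {
    "C": loguniform(1e-2, 1e2),
    "gamma": loguniform(1e-4, 1e0),
}

# n_iter fixes the budget regardless of how large the search space is.
search = RandomizedSearchCV(
    SVC(), param_distributions, n_iter=10, cv=3, random_state=0
).fit(X, y)

print("Best parameters:", search.best_params_)
print(f"Best CV accuracy: {search.best_score_:.3f}")
```

Raising `n_iter` trades more compute for a better chance of landing near a good region of the search space.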

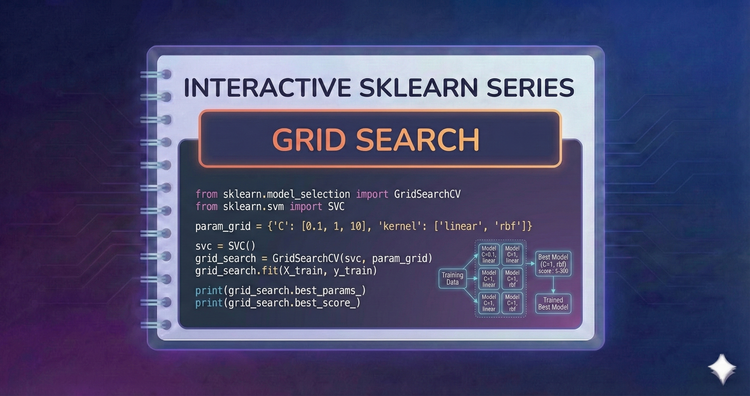

Interactive SkLearn Series - Grid Search

Stop guessing parameters. We’ll use GridSearchCV to exhaustively test every combination of hyperparameters in a grid you define, automatically finding the best-scoring configuration among them.
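A minimal sketch of an exhaustive search follows, with a 3 x 2 grid that yields six candidate configurations. The dataset, estimator, and grid values are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Every combination is tried: 3 values of C x 2 kernels = 6 candidates.
param_grid = {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]}

# Each candidate is scored with 5-fold cross-validation.
search = GridSearchCV(SVC(), param_grid, cv=5).fit(X, y)

print("Candidates tried:", len(search.cv_results_["params"]))
print("Best parameters:", search.best_params_)
print(f"Best CV accuracy: {search.best_score_:.3f}")
```

By default the search refits the best configuration on all the data, so `search.predict` can be used directly afterwards.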

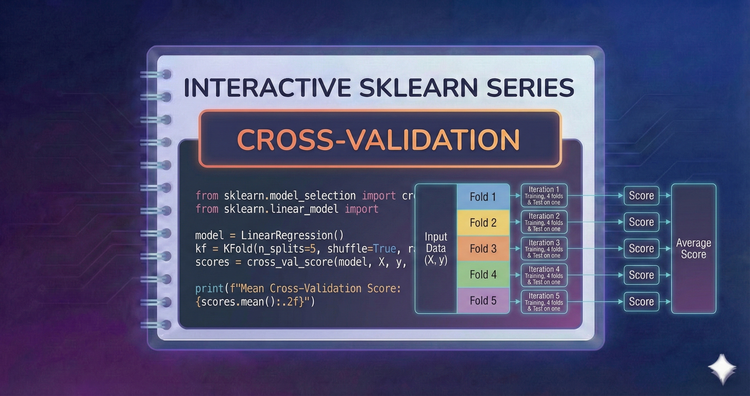

Interactive SkLearn Series - Cross-Validation

Validate with confidence. We’ll move beyond a single train/test split to K-Fold and Stratified K-Fold cross-validation, so your model’s performance estimate depends less on one lucky or unlucky split.
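The difference between the two splitters can be sketched on the iris dataset, which has 50 samples in each of 3 classes. The estimator and the choice of 5 folds are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, StratifiedKFold, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Plain K-Fold: folds are random, so class balance can vary per fold.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
kfold_scores = cross_val_score(model, X, y, cv=kfold)

# Stratified K-Fold: every fold keeps the overall class proportions.
strat = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
strat_scores = cross_val_score(model, X, y, cv=strat)

# Class counts in each stratified test fold.
strat_counts = [np.bincount(y[test]).tolist() for _, test in strat.split(X, y)]

print(f"K-Fold accuracy:     {kfold_scores.mean():.3f} +/- {kfold_scores.std():.3f}")
print(f"Stratified accuracy: {strat_scores.mean():.3f} +/- {strat_scores.std():.3f}")
print("Stratified test-fold class counts:", strat_counts)
```

Stratification matters most when classes are imbalanced, since a plain random fold could otherwise contain very few examples of a rare class.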