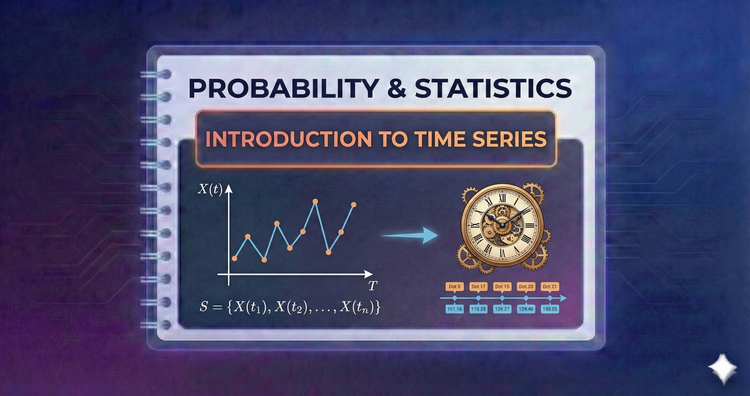

Probability & Statistics - Introduction to Time Series

Data often depends on when it happened. We explore temporal structure, autocorrelation (correlation with past values), and trends, moving beyond independent samples to model history and forecast future values.
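As a rough illustration of autocorrelation, the sketch below computes the sample autocorrelation of a series at a given lag. The function name `autocorrelation` and the two toy series are assumptions made for this example only.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of `series` at a positive integer lag."""
    n = len(series)
    mean = sum(series) / n
    # Denominator: total squared deviation from the mean.
    denom = sum((x - mean) ** 2 for x in series)
    # Numerator: co-deviation of each value with the value `lag` steps earlier.
    num = sum((series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n))
    return num / denom


trend = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]        # steadily rising values
zigzag = [1, -1, 1, -1, 1, -1, 1, -1, 1, -1]   # values that flip sign each step

print(autocorrelation(trend, 1))   # about 0.7: neighbours move together
print(autocorrelation(zigzag, 1))  # about -0.9: neighbours move in opposite directions
```

A value near zero at every lag would suggest the observations behave like independent samples; values far from zero indicate that history carries information about the next observation.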

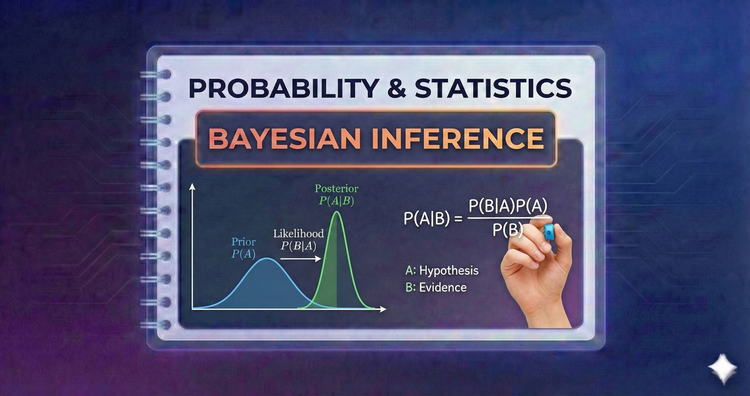

Probability & Statistics - Bayesian Inference

A shift in philosophy. Instead of treating parameters as fixed unknowns, we treat them as random variables. We combine prior beliefs with the likelihood of the observed data to compute a "Posterior" distribution, mathematically updating our view of the world.
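One of the simplest cases of this update is a coin-flip style problem with a Beta prior, where the posterior has a closed form. The sketch below assumes that setup; the helper names `update_beta` and `beta_mean` and the numbers are made up for illustration.

```python
def update_beta(prior_a, prior_b, successes, failures):
    """Beta prior + binomial data -> Beta posterior (conjugate update)."""
    return prior_a + successes, prior_b + failures


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


prior = (2, 2)  # assumed prior belief: success rate is probably near 0.5
posterior = update_beta(*prior, successes=7, failures=3)  # hypothetical data

print(beta_mean(*prior))      # 0.5
print(posterior)              # (9, 5)
print(beta_mean(*posterior))  # about 0.643, pulled from 0.5 toward the observed 0.7
```

The posterior mean lands between the prior mean and the observed success rate, which is the "updating" in numerical form.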

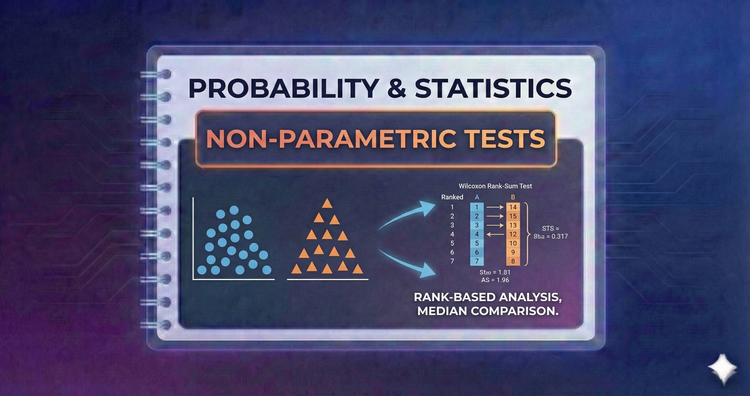

Probability & Statistics - Non-Parametric Tests

When data isn't Normal or sample sizes are tiny, standard parametric tests (such as the t-test) can give misleading results. We use methods based on ranks (like Mann-Whitney or Kruskal-Wallis) to draw valid conclusions without assuming a specific distribution shape.
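To show what "based on ranks" means in practice, here is a minimal sketch of the Mann-Whitney U statistic computed from pooled ranks. It stops at the statistic and does not compute a p-value; the helper names and sample values are assumptions for this example.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a, from the rank sum of the pooled data."""
    ranks = average_ranks(list(sample_a) + list(sample_b))
    n_a = len(sample_a)
    rank_sum_a = sum(ranks[:n_a])
    return rank_sum_a - n_a * (n_a + 1) / 2


group_b = [3.0, 4.0, 5.0]
print(mann_whitney_u([1.1, 2.3, 100.0], group_b))        # 3.0
print(mann_whitney_u([1.1, 2.3, 1_000_000.0], group_b))  # 3.0, unchanged
```

Making the extreme value far larger leaves U untouched, because only the ordering of the observations matters, not their magnitudes.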

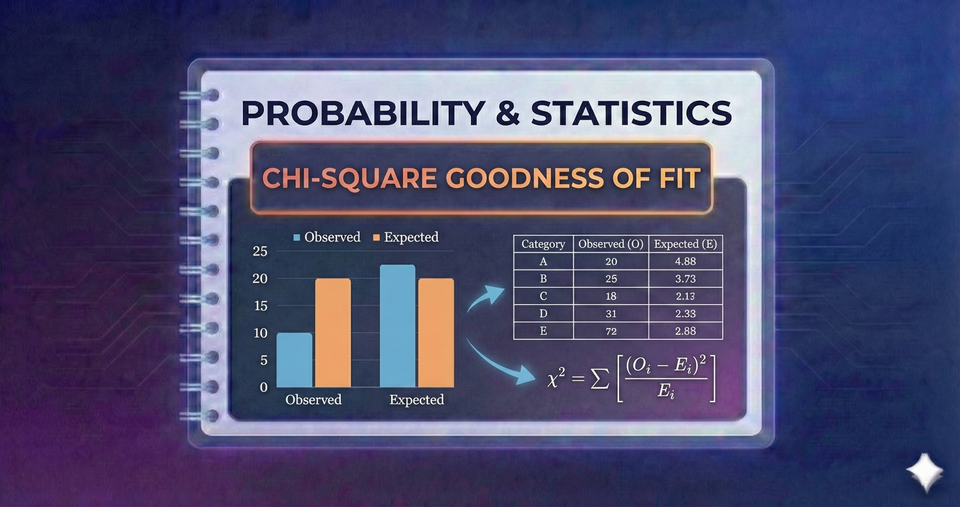

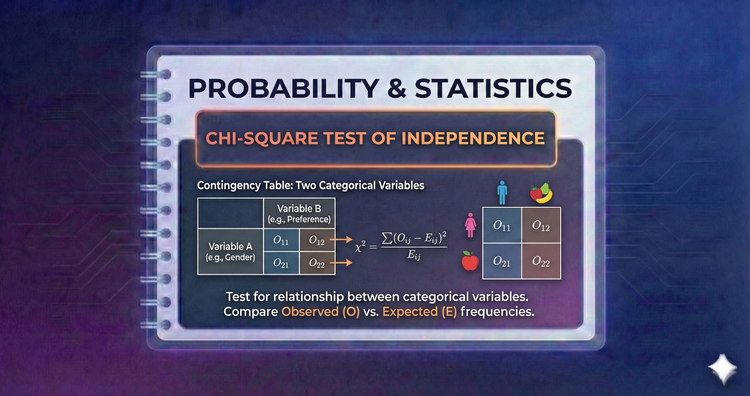

Probability & Statistics - Chi-Square Test of Independence

Are two categorical variables (like "Gender" and "Voting Preference") related? This test checks whether knowing one variable helps predict the other. If the variables are independent, the observed counts should stay close to the counts expected by chance alone.
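The comparison between observed counts and the counts expected under independence can be sketched as follows. The 2x2 table is invented for illustration, and the p-value helper `p_value_df1` is only valid for one degree of freedom (a 2x2 table); both names are assumptions of this example.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic for a contingency table (list of rows)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value_df1(stat):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical counts: rows are two groups, columns are two preferences.
observed = [[30, 20],
            [20, 30]]

stat = chi_square_statistic(observed)
print(stat)               # 4.0
print(p_value_df1(stat))  # about 0.0455
```

A table whose cells all equal their expected counts, such as `[[25, 25], [25, 25]]`, gives a statistic of 0, which is what perfect agreement with independence looks like.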

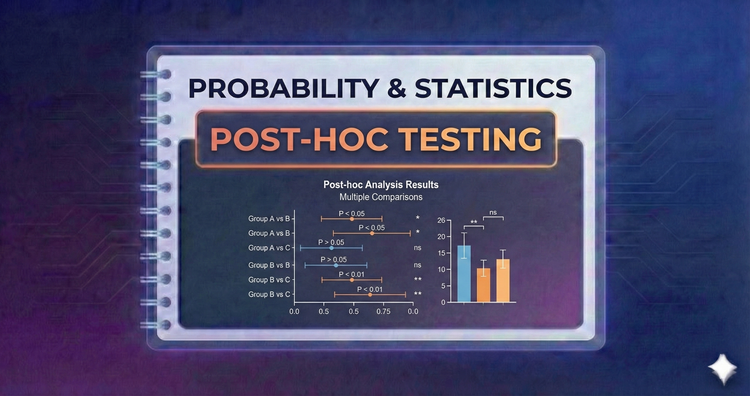

Probability & Statistics - Post-hoc Testing

ANOVA tells us "there is a difference," but not where. Post-hoc tests (like Tukey’s HSD) act as detectives after a significant ANOVA result, comparing specific pairs of groups while correcting for the extra false positives that multiple comparisons produce, to identify exactly which groups differ.
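To see why a correction is needed, the sketch below counts the pairwise comparisons among four groups and shows how the chance of at least one false positive grows. It uses the Bonferroni correction as a simpler stand-in, not Tukey's HSD, and assumes the tests are independent; the group labels and function names are hypothetical.

```python
from itertools import combinations


def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n independent tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level under the Bonferroni correction."""
    return alpha / n_tests


groups = ["A", "B", "C", "D"]            # hypothetical group labels
pairs = list(combinations(groups, 2))    # every pair compared after ANOVA

print(len(pairs))                                # 6
print(familywise_error_rate(0.05, len(pairs)))   # about 0.265 without correction
print(bonferroni_alpha(0.05, len(pairs)))        # about 0.0083 per comparison
```

With six uncorrected comparisons at the 0.05 level, the chance of at least one spurious "difference" is roughly one in four, which is the problem post-hoc procedures are designed to control.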
